In large-scale, real-time data environments, uninterrupted service is non-negotiable. At Trendyol, the Data Streaming team is responsible for maintaining robust, high-throughput Kafka clusters that power the organization’s real-time data flows. With approximately 30 stretched Kafka clusters processing 270 billion messages and 900 terabytes of data daily—while maintaining an average produce latency of just 36 milliseconds—system reliability and agility are paramount.

To adapt to evolving infrastructure requirements, such as operating system updates, image upgrades, network changes, or hypervisor migrations, Trendyol has engineered a fully automated process for replacing key Kafka components, including brokers, KRaft controllers, Schema Registry, Kafka Connect, and ksqlDB servers. The process is designed to ensure these critical changes occur without service disruption, embodying the philosophy of “changing engines mid-flight.”

Treating Kafka Components as Replaceable

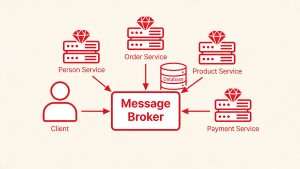

Trendyol’s approach treats every Kafka cluster as a modular system, where components can be safely swapped out—even while the system is live. This mindset drives the development of automated workflows that minimize human intervention and risk. The Data Streaming team leverages AWX Tower workflow templates to orchestrate these replacements, ensuring consistency and reliability at every step.

Automated Kafka Broker Replacement Workflow

Understanding the Broker’s Role

Kafka brokers are the backbone of any cluster, handling message storage, replication, and distribution. Because brokers are stateful, replacing them requires careful orchestration to prevent data loss or service impact.

Step-by-Step Broker Replacement

- Draining the Broker:

Similar to Kubernetes’ `drain` and `cordon` operations, the process begins by moving all replicas from the target broker to other brokers in the cluster. Confluent Platform’s `kafka-remove-brokers` CLI tool automates this, ensuring that:

- Replicas are safely transferred
- The broker is excluded from cluster metadata
- The shutdown is handled gracefully

```bash
kafka-remove-brokers --bootstrap-server broker1:9091,broker2:9091 \
  --command-config ./client.properties --delete --broker-id 8
```

The tool relies on Confluent’s Self-Balancing Clusters (SBC) feature to move the replicas and exclude the broker from the cluster.

- Open-Source Alternative:

For organizations running open-source Apache Kafka, LinkedIn’s Cruise Control offers broker decommissioning. However, an additional step is required: unregistering the broker from the KRaft controllers using the `kafka-cluster` CLI (`kafka-cluster.sh` in the Apache Kafka distribution).

Example command:

```bash
kafka-cluster unregister --id 8 --bootstrap-server broker1:9091 \
  --config ./client.properties
```

After unregistering, the broker can be safely shut down.

- Applicability:

This workflow supports both scaling up (adding brokers) and scaling down (removing brokers), making it versatile for various operational needs.
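Before a drained broker is shut down or unregistered, it is worth confirming that no partition still lists it as a replica. The sketch below assumes the `kafka-topics --describe` output format of recent Apache Kafka and Confluent releases, where each partition line carries a tab-separated `Replicas: 1,2,3` field. The helper names `count_replicas_on_broker` and `unregister_if_drained` and the `BOOTSTRAP`/`CLIENT_CONFIG` variables are hypothetical; on a plain Apache Kafka install the tools carry a `.sh` suffix.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; BOOTSTRAP and CLIENT_CONFIG are assumed to be set by the caller.

# Read `kafka-topics --describe` output on stdin and print how many partitions
# still list broker $1 in their replica set. Assumes partition lines carry a
# tab-separated "Replicas: <id>,<id>,..." field.
count_replicas_on_broker() {
  local broker_id="$1"
  awk -v id="$broker_id" '
    /Replicas:/ {
      list = $0
      sub(/.*Replicas: */, "", list)   # drop everything before the replica list
      sub(/[ \t].*/, "", list)         # drop the fields after it (Isr, ...)
      n = split(list, replicas, ",")
      for (i = 1; i <= n; i++) {
        if (replicas[i] == id) { count++; break }
      }
    }
    END { print count + 0 }
  '
}

# Open-source path: call `kafka-cluster unregister` only once no partition
# references the broker any more; refuse if the check itself fails.
unregister_if_drained() {
  local broker_id="$1" remaining
  remaining=$(
    set -o pipefail
    kafka-topics --bootstrap-server "$BOOTSTRAP" --command-config "$CLIENT_CONFIG" --describe |
      count_replicas_on_broker "$broker_id"
  ) || return 1
  if [[ "$remaining" != "0" ]]; then
    echo "broker $broker_id still hosts $remaining partition replica(s); not unregistering" >&2
    return 1
  fi
  kafka-cluster unregister --id "$broker_id" \
    --bootstrap-server "$BOOTSTRAP" --config "$CLIENT_CONFIG"
}
```

On the Confluent path, where `kafka-remove-brokers` already handles exclusion, `count_replicas_on_broker` alone can serve as a last check before shutdown. Describing every topic can be slow on a large cluster, so the check is meant to run once at the end of a drain rather than in a tight loop.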

Infrastructure Management and Automation

Once a broker is removed and shut down, infrastructure changes can be performed—such as OS patching, image upgrades, or network modifications. Trendyol uses Terraform for server provisioning, streamlining the replacement process.

Terraform Taint:

The `terraform taint` command marks a resource for recreation during the next `terraform apply`, so the new instance is provisioned with the latest configuration.
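`terraform taint` has been deprecated since Terraform v0.15.2; HashiCorp recommends the `-replace=ADDRESS` option of `terraform plan` and `terraform apply` instead, which shows the replacement in the plan rather than editing state up front. A minimal sketch of the same step with `-replace`, assuming bash 4+ (for `mapfile`) and the module address used in this article; `replace_flags`, `replace_broker_servers`, and the plan file name are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn resource addresses (one per line on stdin) into
# -replace=ADDRESS flags for `terraform plan` / `terraform apply`.
replace_flags() {
  local addr
  while IFS= read -r addr; do
    [[ -z "$addr" ]] && continue
    # Data sources are re-read on every plan, so there is nothing to replace.
    if [[ "$addr" == data.* || "$addr" == *.data.* ]]; then
      continue
    fi
    printf -- '-replace=%s\n' "$addr"
  done
}

# Plan the replacement of every resource in the first broker's server module,
# then apply exactly that saved plan.
replace_broker_servers() {
  local module="module.kafka-cluster-01.module.broker.module.servers[0]"
  local -a flags
  mapfile -t flags < <(terraform state list "$module" | replace_flags)
  (( ${#flags[@]} > 0 )) || { echo "no resources found under $module" >&2; return 1; }
  terraform plan "${flags[@]}" -out=replace-broker.tfplan &&
    terraform apply replace-broker.tfplan
}
```

Saving the plan with `-out` and applying that file means the resources that get recreated are exactly the ones someone reviewed in the plan output.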

Example Script

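```bash
# Taint every resource under the first broker's server module so that the
# next `terraform apply` destroys and recreates it.
terraform state list "module.kafka-cluster-01.module.broker.module.servers[0]" |
  while IFS= read -r state; do
    echo "Tainting state: $state"
    terraform taint "$state"
  done
```

Stateless Component Replacement: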

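Components like KRaft controllers, Schema Registry, Kafka Connect, and ksqlDB servers are effectively stateless for replacement purposes: their durable state lives in Kafka topics or, for KRaft controllers, in the metadata log replicated across the controller quorum. They can therefore be safely shut down and reprovisioned. The only caveat is to replace the active (leader) KRaft controller last, so the quorum goes through a single leader election instead of one per replaced controller.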
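The current quorum leader is reported on the `LeaderId:` line of `kafka-metadata-quorum describe --status`. A minimal sketch that reads that value and orders controller IDs so the leader is replaced last; the helper names and controller IDs are hypothetical, and because leadership can move while followers restart, re-running the status check before each replacement is the safer habit.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for ordering KRaft controller replacements.

# Read `kafka-metadata-quorum ... describe --status` output on stdin and print
# the ID of the current quorum leader (the "LeaderId:" line).
leader_id_from_status() {
  awk -F': *' '$1 == "LeaderId" { print $2; exit }'
}

# Print controller IDs (args 2..n) with the leader (arg 1) moved to the end.
controller_replacement_order() {
  local leader="$1" id
  shift
  for id in "$@"; do
    if [[ "$id" != "$leader" ]]; then printf '%s\n' "$id"; fi
  done
  for id in "$@"; do
    if [[ "$id" == "$leader" ]]; then printf '%s\n' "$id"; fi
  done
}

# Example: look up the leader, then list controllers 1, 2 and 3 in replacement order.
# leader=$(kafka-metadata-quorum --bootstrap-server broker1:9091 \
#   --command-config ./client.properties describe --status | leader_id_from_status)
# controller_replacement_order "$leader" 1 2 3
```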

Future Enhancements and Scaling

Broker removal can be time-intensive due to the data reassignment process. Trendyol is exploring advanced features to further streamline operations:

- Tiered Storage:

Moves older data to object storage (e.g., Amazon S3) while retaining recent data on local disks, so less data has to be reassigned when a broker is drained.

- Diskless Topics (KIP-1150):

Proposes storing topic data directly in object storage, which would make brokers effectively stateless and replacements even simpler.

- AI-Powered Terraform Reviews:

Integrating AI agents to review Terraform changes for improved automation and risk mitigation.

- Autoscaling Kafka Clusters:

Building on these workflows, Trendyol aims to support fully automated scaling, adapting to changing workloads without manual intervention.
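For reference, open-source Apache Kafka (3.6 and later, KIP-405) enables tiered storage per topic with the `remote.storage.enable` and `local.retention.ms` topic configs, provided the brokers run with `remote.log.storage.system.enable=true` and a remote storage plugin; Confluent Platform’s Tiered Storage uses its own `confluent.tier.*` settings instead. A sketch under the Apache Kafka assumptions, where the `run` and `enable_tiering` helpers, the `DRY_RUN` switch, and the one-day default are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers; BOOTSTRAP and CLIENT_CONFIG are assumed to be set by the caller.

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Turn on tiered storage for one topic and keep only $2 ms (default: one day)
# of data on local broker disks; older segments remain only in remote storage
# once they have been uploaded.
enable_tiering() {
  local topic="$1" local_retention_ms="${2:-86400000}"
  run kafka-configs --bootstrap-server "$BOOTSTRAP" --command-config "$CLIENT_CONFIG" \
    --alter --entity-type topics --entity-name "$topic" \
    --add-config "remote.storage.enable=true,local.retention.ms=${local_retention_ms}"
}
```

Because a new replica only has to copy the locally retained portion of each partition, a shorter `local.retention.ms` is what, in principle, shrinks the data moved when a broker is drained.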

Conclusion

Trendyol’s automated Kafka broker replacement workflow demonstrates that it is possible to upgrade and maintain mission-critical streaming infrastructure without downtime. By treating Kafka components as replaceable modules and relying on automation tools like AWX Tower and Terraform, the Data Streaming team keeps operations safe and efficient, even as the underlying engines change mid-flight. This approach offers a practical model for operational resilience in high-volume, real-time data environments.
