Artificial intelligence and machine learning have rapidly transformed industries, delivering measurable value across sectors such as healthcare, finance, logistics, and communications. However, ensuring the reliability and security of AI-driven systems presents unique challenges. Quality assurance (QA) for AI and machine learning is evolving swiftly, especially in data-sensitive environments where robust validation is crucial to safeguard infrastructure and critical operations.

Understanding the Machine Learning Model Lifecycle

From a QA perspective, the machine learning model lifecycle encompasses three primary stages:

- Preparation: Data is collected, cleaned, and organized to facilitate effective analysis and modeling.

- Experimentation: Features are engineered, and models are designed, trained, validated, and fine-tuned before deployment.

- Deployment: Validated models are integrated into production systems, where they are monitored for accuracy and reliability.

Each stage demands targeted QA practices, as the focus shifts from data integrity to model performance and, finally, to system reliability in real-world use.

Data Quality Assurance in Machine Learning

High-quality data is the bedrock of successful machine learning initiatives. Inadequate or flawed data can undermine even the most sophisticated models. QA processes should address:

- Dataset Validation: Checking for duplicates, missing values, syntax and format errors, and semantic inconsistencies.

- Statistical Analysis: Identifying anomalies and outliers that could skew model performance.

- Project-Specific Testing: Tailoring data validation strategies to the unique requirements of each project.
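The dataset checks listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not how Deequ, Great Expectations, or TensorFlow Data Validation work internally. The schema, the column names, and the 1.5 × IQR outlier rule (Tukey's fences) are hypothetical choices made for the example.

```python
import math

# Hypothetical schema for illustration: column name -> expected Python type
SCHEMA = {"age": int, "income": float, "country": str}


def validate_rows(rows, schema):
    """Collect duplicate rows, missing values, and type (format) errors."""
    issues = {"duplicates": [], "missing": [], "type_errors": []}
    seen = set()
    for i, row in enumerate(rows):
        # Two rows count as duplicates if every schema column matches
        key = tuple(row.get(col) for col in schema)
        if key in seen:
            issues["duplicates"].append(i)
        seen.add(key)
        for col, expected_type in schema.items():
            value = row.get(col)
            # Treat None and float NaN as missing values
            if value is None or (isinstance(value, float) and math.isnan(value)):
                issues["missing"].append((i, col))
            elif not isinstance(value, expected_type):
                issues["type_errors"].append((i, col))
    return issues


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]; expects a non-empty list."""
    xs = sorted(values)
    n = len(xs)

    def quantile(q):
        # Linear interpolation between the two closest ranks
        pos = q * (n - 1)
        lo, hi = math.floor(pos), math.ceil(pos)
        return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)

    q1, q3 = quantile(0.25), quantile(0.75)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


rows = [
    {"age": 34, "income": 52000.0, "country": "DE"},
    {"age": 34, "income": 52000.0, "country": "DE"},    # duplicate of row 0
    {"age": None, "income": 48000.0, "country": "FR"},  # missing age
    {"age": "41", "income": 61000.0, "country": "US"},  # age stored as text
]
report = validate_rows(rows, SCHEMA)
outliers = iqr_outliers([10, 12, 11, 13, 12, 11, 95])
```

Here `report` flags row 1 as a duplicate, row 2 as missing `age`, and row 3 as a format error, while `outliers` isolates the value 95. Project-specific rules would extend the schema rather than replace these generic checks.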

While much of data QA remains manual, tools such as Deequ, Great Expectations, and TensorFlow Data Validation are increasingly used to automate and standardize these checks.

Model Testing: Treating Models as Applications

Effective QA treats machine learning models as full-fledged applications, emphasizing comprehensive testing at every level:

- Unit Testing: Ensuring that individual model components function correctly across a wide range of scenarios.

- Dataset Testing: Verifying that training and test datasets are drawn from the same underlying distribution, so that evaluation results are not misleading.

- Feature Testing: Assessing which features most significantly impact model predictions, especially when comparing multiple models.

- Security Testing: Protecting models from adversarial attacks, such as adversarial examples (inputs with small, deliberately crafted perturbations), which can cause misclassification or erroneous outputs.

- Bias Testing: Detecting and mitigating biases—selection, framing, systematic, and personal perception—that may distort model outcomes.
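Adversarial attacks on models can be illustrated with the fast gradient sign method (FGSM), which nudges every input feature by a fixed amount `epsilon` in the direction that increases the model's loss. The sketch below assumes a hypothetical logistic-regression model with hand-picked weights, chosen because its input gradient has a simple closed form; real security testing would target the actual model, typically through a framework's automatic differentiation.

```python
import math

# Hypothetical toy model: logistic regression with hand-picked weights
WEIGHTS = [2.0, -3.0, 1.5]
BIAS = 0.25


def predict_proba(x):
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(x):
    return int(predict_proba(x) >= 0.5)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y_true, epsilon):
    """Fast gradient sign method for this model.

    With binary cross-entropy loss, the gradient of the loss with respect
    to input feature i is (p - y_true) * w_i, so each feature moves by
    epsilon in the sign direction of that gradient.
    """
    p = predict_proba(x)
    grad = [(p - y_true) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


def is_robust(x, y_true, epsilon):
    """Security check: does the label survive the FGSM perturbation?"""
    return predict(fgsm(x, y_true, epsilon)) == y_true


x = [0.5, 0.2, 0.1]        # correctly classified as class 1
x_adv = fgsm(x, 1, 0.2)    # no feature moves by more than 0.2
```

In this toy setup the prediction for `x` flips from 1 to 0 at `epsilon=0.2` but holds at `epsilon=0.01`. A security test suite could assert `is_robust` over a sample of inputs at the perturbation budget the application is expected to tolerate.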

Automated tools like Pymetrics and Amazon SageMaker Clarify are increasingly used to streamline bias detection and explainability, though manual review remains essential for nuanced cases.
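One common, tool-independent bias check is the disparate impact ratio: the lowest group selection rate divided by the highest. The sketch below is a minimal illustration and does not reproduce the API of Pymetrics or SageMaker Clarify. The predictions and the group labels `"a"` and `"b"` are hypothetical, and the 0.8 threshold follows the "four-fifths rule" convention, which is a screening heuristic rather than proof of fairness.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1s) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(predictions, groups, threshold=0.8):
    """Return (ratio of lowest to highest selection rate, passes threshold?)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    # If no group receives any positive prediction, the rates are all equal
    ratio = 1.0 if highest == 0 else min(rates.values()) / highest
    return ratio, ratio >= threshold


# Hypothetical model outputs for two groups, "a" and "b"
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
ratio, passes = disparate_impact(preds, groups)
```

Group "a" is selected 75% of the time and group "b" 25%, giving a ratio of about 0.33, so the check fails. A failing ratio is a signal for the manual review the paragraph above describes, not an automatic verdict.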

System-Level Testing in Complex IT Environments

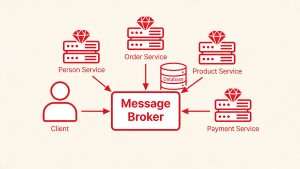

Modern machine learning models are rarely standalone; they operate within broader IT ecosystems. QA strategies should include:

- Integration Testing: Verifying that models interact correctly with other system components and APIs.

- System Testing: Evaluating the performance, security, and user interface of the entire application, both manually and through automated suites.

- Fault Tolerance and Compatibility: Feeding malformed or edge-case inputs to the system to assess its resilience, and verifying that it behaves consistently across target environments.
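A fault-tolerance test can be sketched as a suite of malformed requests sent through the model's input boundary, asserting that each one is rejected cleanly instead of crashing or producing a score. The example assumes a hypothetical three-feature model (`toy_model`) behind a hypothetical `safe_predict` wrapper; in a real system the same cases would be sent to the deployed API.

```python
import math

EXPECTED_FEATURES = 3  # hypothetical input width


def toy_model(features):
    # Stand-in for a real model: the mean of the features
    return sum(features) / len(features)


def safe_predict(payload):
    """Validate a request payload before calling the model.

    Returns ("ok", prediction) or ("error", message).
    """
    if not isinstance(payload, (list, tuple)):
        return "error", "payload must be a list of numbers"
    if len(payload) != EXPECTED_FEATURES:
        return "error", f"expected {EXPECTED_FEATURES} features, got {len(payload)}"
    for value in payload:
        # bool is a subclass of int in Python, so reject it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return "error", "non-numeric feature"
        if not math.isfinite(value):
            return "error", "non-finite feature"
    return "ok", toy_model(payload)


# Malformed and edge-case inputs that must all be rejected
EDGE_CASES = [
    None, "1,2,3", [], [1, 2], [1, 2, "x"],
    [1, float("nan"), 3], [1, float("inf"), 3], [True, 0, 0],
]


def run_fault_tolerance_suite():
    """Return a list of (case, reason) for every case that was not handled."""
    failures = []
    for case in EDGE_CASES:
        try:
            status, _ = safe_predict(case)
        except Exception as exc:  # a crash counts as a failure
            failures.append((case, repr(exc)))
            continue
        if status != "error":
            failures.append((case, "accepted invalid input"))
    return failures
```

An empty list from `run_fault_tolerance_suite()` means every edge case was rejected gracefully, while a valid request such as `[1, 2, 3]` still returns a prediction.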

These practices help ensure that models deliver consistent value as part of larger, mission-critical solutions.

Continuous Testing After Production Deployment

Unlike traditional software, machine learning models require ongoing validation after deployment. As real-world data evolves, models may experience data drift, leading to degraded performance. Effective post-deployment QA includes:

- Monitoring and Logging: Tracking model accuracy and performance to identify issues promptly.

- A/B Testing: Comparing different model versions to select the most effective solution for production.

- Automated Continuous Testing: Leveraging CI/CD pipelines to streamline model updates, testing, and redeployment.
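Data drift of the kind described in this section can be monitored by comparing a feature's recent production values against a reference sample from training time. The sketch below uses the two-sample Kolmogorov-Smirnov (KS) statistic, the largest gap between the two empirical CDFs, with the standard large-sample critical value at a 5% significance level (coefficient 1.358). The choice of test and the synthetic data are assumptions for illustration; production monitors often track several statistics per feature.

```python
import bisect
import math


def ks_statistic(reference, current):
    """Two-sample KS statistic: max gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    d = 0.0
    # Both ECDFs are step functions, so checking every observed value suffices
    for x in set(ref) | set(cur):
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def drift_detected(reference, current, c_alpha=1.358):
    """Flag drift when D exceeds c(alpha) * sqrt((n + m) / (n * m))."""
    n, m = len(reference), len(current)
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical


# Synthetic example: production values shifted upward by 0.3
reference = [i / 100 for i in range(100)]
shifted = [v + 0.3 for v in reference]
```

Here `drift_detected(reference, shifted)` returns True (D is about 0.30 against a critical value of about 0.19), while comparing the reference sample with itself returns False. A CI/CD pipeline could run such a check on a schedule and trigger retraining or an alert when it fires.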

Proactive monitoring and rapid iteration help maintain high standards of accuracy and reliability in dynamic environments.
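A/B testing of model versions usually ends with a significance check, so that a small difference in results is not mistaken for an improvement. The sketch below assumes each request yields a binary outcome (for example, a correct prediction or a conversion) and applies a two-sided two-proportion z-test; the counts are hypothetical.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two success rates.

    Returns (z, p_value); a positive z means version B performed better.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    # Pooled rate under the null hypothesis that both versions are equal
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: model A 820/1000 correct, model B 860/1000 correct
z, p_value = two_proportion_z_test(820, 1000, 860, 1000)
```

With these counts z is about 2.44 and the p-value about 0.015, so at a 5% level version B's improvement is unlikely to be chance alone. The significance level, sample size, and choice of metric should be fixed before the experiment starts.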

Key Takeaways for QA in AI and Machine Learning

- Prioritize data quality by visualizing, validating, and continuously monitoring datasets for anomalies and bias.

- Approach model QA with the same rigor as traditional software, incorporating comprehensive testing at both component and system levels.

- Maintain ongoing validation after deployment to address data drift and evolving real-world conditions, supported by robust monitoring and CI/CD practices.

Conclusion

Quality assurance for AI and machine learning is a rapidly maturing discipline. As organizations increasingly rely on intelligent systems, robust QA processes are essential to ensure data integrity, model reliability, and system security. By adopting a holistic approach—spanning data validation, model testing, system integration, and continuous monitoring—businesses can unlock the full potential of AI while minimizing risks and maximizing value.
