DevOps teams focus on reliable releases, faster delivery cycles, and fewer errors. Decision makers, on the other hand, seek a process that coordinates development tasks, ensures code quality, and limits downtime. While the end goals are the same, the two groups differ in perspective, approach, and the systems they rely on.

This article explains the essentials of CI/CD pipelines in a way that suits both perspectives. You will see how each practice (continuous integration, continuous delivery, and continuous deployment) fits into the overall scheme. The article explores why these practices matter, which tools are common, how pipelines are assembled, and what benefits they bring to organizational goals. By the end, you will have a clear view of their future implications for businesses and technical teams.

Let’s begin:

What is Continuous Integration (CI)?

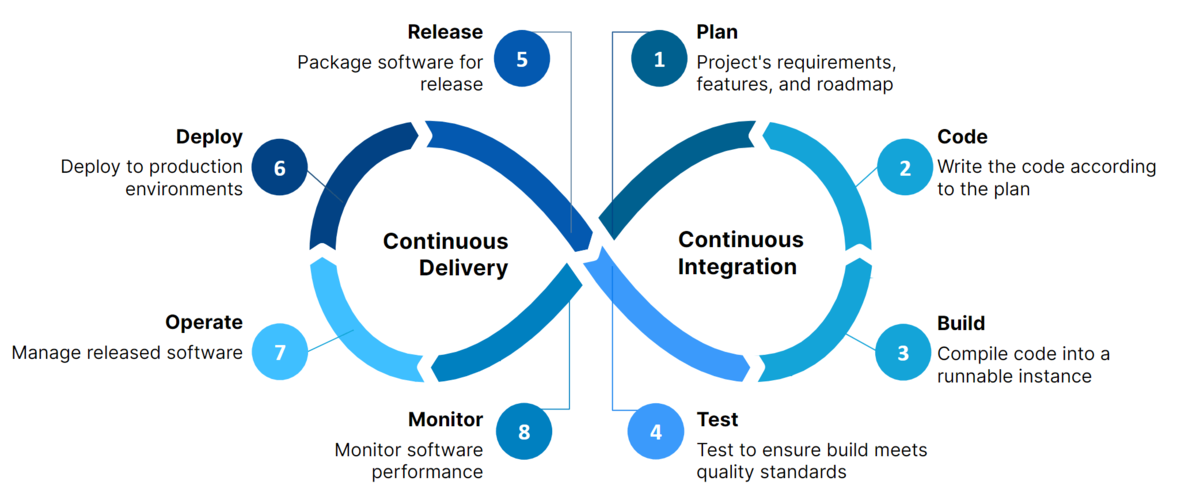

Continuous integration means each developer merges code updates to a shared branch in small increments. The goal is to prevent large merges that lead to conflicts. CI tools detect new commits in version control and then perform an automated process:

- Fetch code from the repository.

- Build the application.

- Run tests to confirm functionality.

A typical CI workflow includes a version control system for storing code, a build system for compiling it, and test suites that verify its behavior. When new code is pushed, the CI process starts. If any step fails, the pipeline halts so the developer who introduced the change can fix the error. If every step passes, the code is deemed stable. This consistent build-and-test cycle helps maintain reliability and keeps the main branch in good shape.
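The fetch, build, and test cycle can be sketched as a small fail-fast runner. This is a minimal illustration under stated assumptions, not how any particular CI tool works internally: the step names and commands are hypothetical placeholders (here they just invoke the Python interpreter), whereas a real tool reads its steps from a configuration file and calls git, a build tool, and a test runner.

```python
import subprocess
import sys
from typing import List, Tuple

# A step is a readable name plus the command to run (hypothetical examples).
Step = Tuple[str, List[str]]


def run_ci(steps: List[Step]) -> bool:
    """Run each step in order and stop at the first failure (fail fast)."""
    for name, command in steps:
        print(f"[ci] running step: {name}")
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            # Halt so the author of the change can inspect the error and fix it.
            print(f"[ci] step '{name}' failed with exit code {result.returncode}")
            print(result.stderr)
            return False
    print("[ci] all steps passed; the change is considered stable")
    return True


if __name__ == "__main__":
    # Placeholder commands standing in for fetch, build, and test.
    demo_steps: List[Step] = [
        ("fetch", [sys.executable, "-c", "print('fetching code')"]),
        ("build", [sys.executable, "-c", "print('building')"]),
        ("test", [sys.executable, "-c", "print('running tests')"]),
    ]
    run_ci(demo_steps)
```

Because the loop returns on the first non-zero exit code, a failing build never reaches the test step, which mirrors the "pipeline halts" behavior described above.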

With frequent commits, teams stay aligned and avoid diverging branches that are hard to reconcile. When conflicts do arise, they are smaller and simpler to resolve. Everyone gets rapid feedback on potential defects, so they do not accumulate.

By merging code in small increments, teams can see which commits caused issues, and the effort to fix them stays limited to a small scope. The end result? A more stable code base, with continuous checks on quality and functionality.

What is Continuous Delivery (CD)?

Continuous delivery is a step that comes after continuous integration. Once code has passed CI checks, it is ready for further validation in environments that closely resemble production. Teams confirm that the code works at scale, integrates with external services, and meets performance requirements. Here, the core idea is to ensure that the software is always ready to be deployed whenever the organization decides.

Developers often set up multiple environments to handle this. For instance, a testing environment receives the latest application build, and automated tests run to verify end-to-end scenarios, user interface flows, performance metrics, or security checks. If issues appear, the pipeline blocks the release and sends alerts so the team can fix the bugs.
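Promoting a build through pre-production environments can be sketched as follows. The environment names, the check functions, and the `Build` type are hypothetical; the sketch only shows that a failure in any environment blocks the release and raises an alert, while a build that clears every environment is left in a release-ready state.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A check receives a build id and returns True on success (hypothetical interface).
Check = Callable[[str], bool]


@dataclass
class Build:
    build_id: str
    passed_envs: List[str] = field(default_factory=list)


def alert(message: str) -> None:
    # Stand-in for a real notification channel such as chat, email, or a pager.
    print(f"ALERT: {message}")


def promote(build: Build, environments: Dict[str, List[Check]]) -> bool:
    """Run every check in each environment, in order; block the release on any failure."""
    for env_name, checks in environments.items():  # dicts keep insertion order
        for check in checks:
            if not check(build.build_id):
                alert(f"build {build.build_id} failed '{check.__name__}' in {env_name}")
                return False  # release blocked
        build.passed_envs.append(env_name)
    return True  # release-ready: can be pushed to production on short notice
```

A call such as `promote(Build("123"), {"testing": [end_to_end], "staging": [performance, security]})` would stop at the first failing check, where `end_to_end`, `performance`, and `security` are whatever test hooks a team defines.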

Continuous delivery aims for a release-ready state. It does not mean every change is released to users immediately; it means each successful build can be pushed to production on short notice. Organizations value this because it lowers the cost of releasing new functionality. Instead of big-bang rollouts, they ship incremental improvements. That style cuts down on last-minute surprises and large, stressful merges. Smaller changes are easier to trace, debug, and roll back if needed.

While continuous integration ensures code is properly merged and tested at the unit and integration level, continuous delivery focuses on making sure features are stable across broader checks and more realistic environments. It also enforces a consistent process for packaging and preparing software. The final choice to go live might be a manual trigger, but the pipeline handles the hard work of verifying that code stands up to production conditions.

What is Continuous Deployment?

Continuous deployment builds on continuous delivery. Instead of stopping before the production environment and waiting for a human release decision, it goes one step further: if a change passes all required checks and tests, it moves into production automatically. There is no separate manual gate; the pipeline itself decides that the release can happen.
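At release time, the difference between continuous delivery and continuous deployment comes down to who makes the final call. A minimal sketch, assuming a hypothetical `ReleaseMode` setting and an `approved` flag that a person would set under continuous delivery:

```python
from enum import Enum


class ReleaseMode(Enum):
    CONTINUOUS_DELIVERY = "delivery"      # a person triggers the production release
    CONTINUOUS_DEPLOYMENT = "deployment"  # the pipeline releases on its own


def should_deploy(checks_passed: bool, mode: ReleaseMode, approved: bool = False) -> bool:
    """Decide whether a build that reached the end of the pipeline goes to production."""
    if not checks_passed:
        return False  # neither practice ships a build that failed its checks
    if mode is ReleaseMode.CONTINUOUS_DEPLOYMENT:
        return True  # no manual gate
    return approved  # continuous delivery: wait for a human decision
```

Both modes share the same checks; only the last line differs, which is why teams can often move from delivery to deployment by changing a policy rather than rebuilding the pipeline.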

This approach reduces delays between development and actual user availability. Some teams deploy changes several times a day without scheduling a formal release window. As soon as code clears the pipeline, the user-facing system updates. This method demands a strong safety net of automated tests, robust monitoring, and fast rollback mechanisms.

Failures must be caught early, because there is little buffer between development and production. This makes high confidence in testing essential. Teams also need ways to respond if a problem arises. Production monitoring must catch anomalies, and releases should be small and frequent to isolate what triggered the error. Hence, many organizations combine canary releases or phased rollouts with continuous deployment. These strategies push updates to a subset of users first. If metrics remain normal, the update proceeds to all users.
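A phased rollout can be sketched as a loop over increasing traffic percentages, with a metrics check between phases. The phase percentages, the 1% error-rate threshold, and the `error_rate_for` callback are illustrative assumptions, not values any particular tool prescribes. Users are assigned to the rollout by hashing their ID, so the same user consistently sees the same version.

```python
import hashlib
from typing import Callable, Sequence


def in_rollout(user_id: str, percent: int) -> bool:
    """Deterministically place a user in one of 100 buckets; include the first `percent`."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def phased_rollout(
    error_rate_for: Callable[[int], float],
    phases: Sequence[int] = (1, 10, 50, 100),
    max_error_rate: float = 0.01,
) -> int:
    """Expand traffic phase by phase; return the final percentage (0 means rolled back)."""
    for percent in phases:
        observed = error_rate_for(percent)  # e.g. read from production monitoring
        if observed > max_error_rate:
            print(f"error rate {observed:.2%} at {percent}% exceeds threshold; rolling back")
            return 0
        print(f"{percent}% of users healthy (error rate {observed:.2%})")
    return phases[-1]
```

The early phases expose only a small slice of users, so a bad change that slips past the automated tests is caught while its impact is still limited.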

Continuous deployment is a good fit for scenarios that demand rapid iteration and stable automation. Examples include platforms that ship continuous enhancements, or businesses that want to remove friction from shipping new code. It eliminates wait times for formal approvals, speeds up feedback from real usage, and encourages agile collaboration. However, it also requires teams to be comfortable with minimal manual checkpoints. In certain regulated environments, or under more cautious release models, continuous delivery might suffice. But once a team’s confidence and processes mature, continuous deployment offers the fastest path from idea to production.

What are CI/CD Pipelines?

A CI/CD pipeline is the structured process that connects the distinct phases of integrating, testing, delivering, and potentially deploying code. It is usually defined in a configuration file that sets the order of actions: fetch the latest code, compile, run unit tests, run additional checks, package artifacts, perform integration or system tests, and either stage or deploy the final result.

The pipeline runs automatically whenever new code is committed. Each step outputs logs and results to show if it succeeded or failed. If a step fails, the pipeline stops at that point. This ensures no further stage receives a flawed artifact. If all steps pass, code moves forward until it is declared ready.
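The stop-on-failure rule can be sketched as a chain of stages in which each stage receives the artifact produced by the previous one. The stage functions are hypothetical stand-ins: raising an exception represents a failed step, and the returned log list stands in for the per-step output a real CI tool would display.

```python
from typing import Callable, List, Optional, Tuple

# A stage is a name plus a function that turns an input artifact into an output artifact.
Stage = Tuple[str, Callable[[str], str]]


def run_pipeline(source: str, stages: List[Stage]) -> Tuple[Optional[str], List[str]]:
    """Pass the artifact from stage to stage and stop at the first failure."""
    log: List[str] = []
    artifact = source
    for name, stage in stages:
        try:
            artifact = stage(artifact)
        except Exception as exc:
            log.append(f"{name}: FAILED ({exc})")
            return None, log  # later stages never receive a flawed artifact
        log.append(f"{name}: ok")
    return artifact, log
```

Returning `None` instead of a partial artifact makes it impossible for a downstream stage, such as packaging or deployment, to pick up the output of a failed step by accident.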

A typical pipeline includes these stages:

- Source and build: The pipeline fetches code, installs dependencies, and compiles the project.

- Initial tests: Unit tests and code linting confirm that newly introduced code meets basic standards.

- Integration tests: The pipeline runs the build alongside dependent services or components to confirm they work together.

- Packaging: The pipeline generates artifacts, containers, or other deployable forms.

- Staging environment: The pipeline sets up a near-production environment. End-to-end tests and performance checks run here.

- Manual or automatic approval: Either a team member triggers release or the pipeline moves forward without manual intervention.

- Production deployment: The pipeline deploys the new release.

- Post-deployment checks: Automated scripts confirm that essential indicators are healthy.

Each stage gives the team visibility into the state of the code. If a step fails, the pipeline halts and sends alerts. That immediate feedback loop shortens debugging time and keeps flawed releases from progressing. Everyone sees the pipeline status and can review logs for debugging. This reduces guesswork. The pipeline’s structure also supports consistency. No matter who commits the code, the same sequence applies, which standardizes release practices across the team. This uniform approach removes many manual steps. It also keeps a living record of quality, so any error or regression can be caught quickly.

The Benefits of CI/CD Implementation

Here is a condensed overview of the benefits of implementing continuous integration and continuous delivery:

- Faster delivery

Frequent merges and automated testing reduce the time spent waiting for large releases. When code is integrated in small batches, releases can happen more often. This approach lets teams respond to market demands or bug reports with short turnaround.

- Predictable quality

Automation detects if a build fails essential tests. Teams see issues early, so they can fix them immediately. This continuous feedback leads to more stable code. The pipeline is consistent, so the release path rarely deviates.

- Reduced integration friction

Traditional release models often keep changes in long-lived feature branches for weeks, which leads to big conflicts at merge time. CI/CD enforces frequent merges, so conflicts stay small. Teams spend less time resolving version-control tangles.

- Lower risk of production failures

Automated tests, security scans, and code quality checks catch vulnerabilities or logic problems. If a flaw surfaces, it appears in the pipeline logs, not after an official launch. This reduces last-minute chaos in production.

- Shorter feedback loops

When new changes deploy often, product owners and stakeholders see results quickly. They can give feedback sooner. That helps refine features without waiting for a multi-month schedule. Code changes remain fresh in developers’ minds, so addressing feedback is simpler.

- Better resource allocation

Many tasks that were manual (build, test, configuration) become automated. Engineers can spend more time on strategic work. Efficiency gains can lead to cost savings. Repetitive steps no longer require human oversight, which curbs errors and speeds up development.

- Easier rollback

Pipelines maintain a record of successful artifacts. If the current release causes errors, teams can revert to a previous stable build with minimal effort. This approach lowers downtime because the pipeline can switch versions quickly.
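Keeping a record of successful artifacts turns rollback into a lookup rather than a rebuild. A minimal sketch, assuming a hypothetical in-memory history; real pipelines keep this record in an artifact repository or container registry, and the artifact names below are made up.

```python
from typing import List, Optional


class ReleaseHistory:
    """Ordered list of artifacts that passed the pipeline and were deployed."""

    def __init__(self) -> None:
        self._releases: List[str] = []

    def record(self, artifact_id: str) -> None:
        self._releases.append(artifact_id)

    @property
    def current(self) -> Optional[str]:
        return self._releases[-1] if self._releases else None

    def rollback(self) -> Optional[str]:
        """Drop the current release and return the previous stable one, if any."""
        if len(self._releases) < 2:
            return None  # nothing older to fall back to
        self._releases.pop()
        return self._releases[-1]
```

After `record("app-1.0.0")` and `record("app-1.1.0")`, calling `rollback()` returns `"app-1.0.0"`, which the deployment step can redeploy as-is because that artifact already passed every check.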

- Clear traceability

Every commit, test result, and deployment action is tracked. The pipeline logs show which commit triggered a failing test or introduced a production incident. That transparency helps with compliance and audits. It also makes retrospectives more data-driven.
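In practice, this traceability means keeping an ordered record that links each commit to its pipeline results. The record fields, test names, and commit hashes below are hypothetical; the sketch shows how such a record answers the question "which commit first broke this test?"

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PipelineRecord:
    commit: str
    test_name: str
    passed: bool


def first_failing_commit(history: List[PipelineRecord], test_name: str) -> Optional[str]:
    """Return the first commit, in pipeline order, where `test_name` went from passing to failing."""
    previously_passed = False
    for record in history:
        if record.test_name != test_name:
            continue
        if record.passed:
            previously_passed = True
        elif previously_passed:
            return record.commit
    return None  # the test never regressed in this history
```

Because each pipeline run covers only a small change, the commit returned here usually points directly at the cause of a regression.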

What are Some Common CI/CD Tools?

Here’s a list of popular CI/CD tools. Each one addresses similar goals but differs in setup, hosting, interface, and pricing. Factors to consider include your existing version control platform, hosting preferences, budget, and specialized requirements (e.g., large monorepos, multi-cloud needs, or advanced security scanning):

- Jenkins

Jenkins is open source and self-hosted. It has a large plugin ecosystem. Teams can customize it for different languages, databases, or platforms. Jenkins can run on local servers or in cloud environments. It is flexible and popular in many enterprises.

- GitLab CI/CD

GitLab integrates code hosting, issue tracking, and CI/CD. Pipeline definitions live in the repository. GitLab Runners execute jobs in Docker containers or on virtual machines. GitLab also includes a built-in container registry, making it a one-stop solution for version control and CI/CD.

- GitHub Actions

GitHub Actions workflows are defined as YAML files within the repository. They automate build, test, and deployment steps. Developers can choose from a marketplace of community actions or create custom actions. Triggers include pushes, pull requests, and scheduled events.

- CircleCI

CircleCI provides pipelines as a service. Configuration lives in a YAML file. CircleCI supports parallel job execution and caching to speed up builds. Teams can view logs and artifacts through the CircleCI interface. It integrates well with GitHub and Bitbucket.

- Travis CI

Travis CI offers a straightforward configuration approach and is often used for open source projects. It supports many languages. The free tier is popular with smaller projects, while paid tiers serve private repositories.

- Azure Pipelines

Azure DevOps includes a pipelines service that can build and release projects. It supports various platforms, including containers, virtual machines, and code in different languages. It aligns well with Microsoft’s ecosystem but is not restricted to it.

- TeamCity

TeamCity by JetBrains is a CI server. It is known for advanced configuration and good integration with JetBrains IDEs. It supports cloud agents and a variety of build runners. It is often used in environments where a custom on-premises solution is desired.

- Bamboo

Bamboo is an Atlassian product. It connects with Bitbucket, Jira, and Confluence. It supports deployment projects, multi-stage builds, and agent management. It can run on-premises or in containerized environments.

What Makes a Good Pipeline?

An effective pipeline is dependable, clear, and adaptable to change.

Here’s more to it:

- Consistent process

A pipeline that always follows the same steps helps developers trust the outcomes. No matter who contributes code, the pipeline is uniform. That simplifies handoffs, audits, and troubleshooting.

- Fast feedback

Speed is critical. If a pipeline takes hours for a single commit, developers wait or switch tasks. By the time results arrive, they may have lost context. A pipeline that runs in minutes keeps the development flow uninterrupted.

- Quality tests

Automated tests must be reliable. Flaky tests erode confidence. Each step should run in a controlled environment, free of leftover data. Good test coverage matters, but coverage alone does not guarantee quality. Tests must reflect real scenarios and critical paths.
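One way to spot flaky tests is to rerun a test several times against the same code: a test that both passes and fails without any change is flaky. A minimal sketch, assuming tests are plain callables that raise `AssertionError` on failure; the default of 5 reruns is arbitrary, and a small count can miss tests that fail only rarely.

```python
from typing import Callable


def classify_test(test: Callable[[], None], runs: int = 5) -> str:
    """Run a test repeatedly and classify it as 'pass', 'fail', or 'flaky'."""
    outcomes = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add(True)
        except AssertionError:
            outcomes.add(False)
    if outcomes == {True}:
        return "pass"
    if outcomes == {False}:
        return "fail"
    return "flaky"  # mixed results on identical code
```

Tests classified as flaky can be quarantined and fixed separately, so they stop eroding confidence in the pipeline's red and green signals.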

- Efficient structure

Teams often break tests into layers: fast unit tests first, then slower integration or acceptance tests. If an early step fails, the pipeline stops, which avoids spending compute time on expensive tests for a change that is already broken. Running independent tasks in parallel, and dependent ones in sequence, can speed up delivery without losing clarity.
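Deciding which steps can run in parallel is a dependency-ordering problem. The sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to group a hypothetical set of jobs into batches: every job in a batch has all of its prerequisites finished, so jobs within the same batch could run concurrently.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def parallel_batches(dependencies: Dict[str, Set[str]]) -> List[List[str]]:
    """Group jobs into batches that can run in parallel while respecting dependencies."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the jobs form a cycle
    batches: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make the output stable
        batches.append(ready)
        sorter.done(*ready)
    return batches


# Hypothetical job graph: each job maps to the jobs it depends on.
jobs = {
    "build": set(),
    "lint": set(),
    "unit_tests": {"build"},
    "integration_tests": {"unit_tests"},
    "security_scan": {"build"},
    "package": {"integration_tests", "security_scan", "lint"},
}

if __name__ == "__main__":
    # Prints [['build', 'lint'], ['security_scan', 'unit_tests'], ['integration_tests'], ['package']]
    print(parallel_batches(jobs))
```

The cheap `lint` job runs in the first batch alongside `build`, while the expensive integration tests wait until unit tests have passed, matching the layered approach described above.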

- Security and compliance

A pipeline is a gateway before production. Security scans can detect known vulnerabilities, outdated libraries, or configuration problems. Code scanning can verify compliance with internal or regulatory standards. With each commit, potential issues are flagged.
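At its core, a dependency scan compares the versions a project pins against a list of known-vulnerable versions. The advisory data and package names below are invented for illustration, and the parser only understands simple `name==version` lines; real scanners pull advisories from vulnerability databases, handle version ranges, and parse full requirement syntax.

```python
from typing import Dict, List, Set


def parse_requirements(text: str) -> Dict[str, str]:
    """Parse simple 'name==version' lines, ignoring blank lines and comments."""
    pins: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        if not line or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def find_vulnerable(pins: Dict[str, str], advisories: Dict[str, Set[str]]) -> List[str]:
    """Return 'name==version' for every pin that appears in the (hypothetical) advisory data."""
    return sorted(
        f"{name}=={version}"
        for name, version in pins.items()
        if version in advisories.get(name, set())
    )
```

A pipeline step would typically fail the build whenever `find_vulnerable` returns a non-empty list, flagging the issue on the commit that introduced it.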

- Scalability

As a project grows, the pipeline might need to run more jobs concurrently. Tools that handle additional build agents or containers can expand capacity. This ensures that as the team grows, pipeline performance does not degrade.
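Adding build agents effectively raises the number of jobs that can run at once. A minimal sketch using the standard library's `concurrent.futures.ThreadPoolExecutor`, where `agents` stands in for the number of available build agents and `run_job` is a hypothetical placeholder that just sleeps briefly.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def run_job(name: str) -> str:
    # Placeholder for a real build or test job.
    time.sleep(0.1)
    return f"{name}: done"


def run_on_agents(jobs: List[str], agents: int) -> List[str]:
    """Run independent jobs with at most `agents` running concurrently."""
    with ThreadPoolExecutor(max_workers=agents) as pool:
        return list(pool.map(run_job, jobs))  # results come back in input order
```

With four jobs and four agents, the jobs overlap instead of queuing one after another; raising `agents` is the code-level analogue of adding build machines as the team grows.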

- Clear error reporting

When the pipeline fails, developers need actionable details. Logs or test reports should pinpoint the problem. Vague “build failed” messages slow resolution. A pipeline that provides direct links to failing tests or code coverage reports shortens fix times.
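Many test runners can write results in the JUnit XML format, which a pipeline can parse to report exactly which tests failed and why. A minimal sketch using the standard library's `xml.etree.ElementTree`; the sample report is invented, and real reports vary by tool (some wrap suites in a `testsuites` element, which the `iter()` call still handles).

```python
import xml.etree.ElementTree as ET
from typing import List


def failing_tests(junit_xml: str) -> List[str]:
    """Return 'classname.name: message' for each failed or errored test case."""
    root = ET.fromstring(junit_xml)
    failures: List[str] = []
    for case in root.iter("testcase"):
        problem = case.find("failure")
        if problem is None:
            problem = case.find("error")
        if problem is not None:
            message = problem.get("message", "no message")
            failures.append(f"{case.get('classname')}.{case.get('name')}: {message}")
    return failures


SAMPLE_REPORT = """<testsuite name="unit" tests="2" failures="1">
  <testcase classname="tests.test_cart" name="test_add_item"/>
  <testcase classname="tests.test_cart" name="test_total">
    <failure message="expected 30, got 25">AssertionError</failure>
  </testcase>
</testsuite>
"""
```

Posting the resulting lines in the pipeline summary gives developers the failing test and assertion message directly, instead of a bare "build failed".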

- Versioned configuration

Storing pipeline definitions in the repository helps track changes to the pipeline logic. If a pipeline breaks due to a script change, reverting is easy. Reviewing pipeline changes also ensures that no one modifies critical steps without oversight.

Conclusion

CI/CD began as a way to solve challenges around integrating code changes and verifying readiness, and it has evolved into a central component of modern development.

Over time, teams realized that small, frequent merges and automated checks form a reliable engine for shipping software at a quicker cadence. As systems keep growing more complex, the need for consistent, automated, and traceable processes will keep rising.

The fundamental mechanics (merging often, testing automatically, and deploying code in small increments) form a predictable workflow that suits various development methods. This approach meets the need for speed while keeping risk manageable. It also aligns with broader goals of cost management, clear quality metrics, and the capacity to adapt to shifting product demands.

Looking ahead, these pipelines will likely integrate more specialized checks, and monitoring and automated rollbacks will gain prominence as systems scale. Decision makers who prioritize swift iteration and stable operations will keep building out these processes. As the volume and complexity of software grow, structured pipelines and robust testing remain the best path to stable deployments. That approach lets organizations respond faster, maintain quality, and reduce firefighting in production.