Artificial Intelligence (AI) has evolved from a research curiosity to a business-critical technology driving decisions in healthcare, finance, law, and even government systems. However, as machine learning models grow more complex, with deep neural networks containing millions of parameters, understanding why a model made a specific prediction has become increasingly difficult.

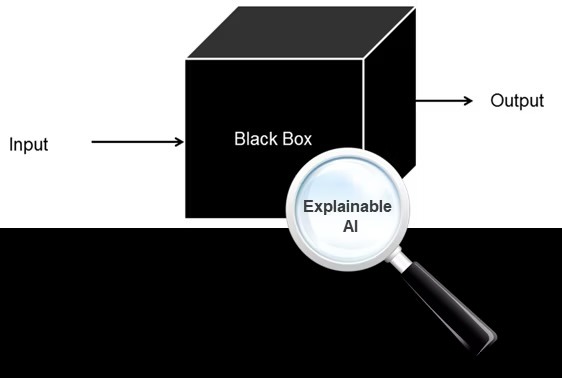

This “black-box problem” poses a major challenge: if a model predicts a patient has a high risk of heart disease or rejects a loan application, can we explain why? This is where Explainable AI (XAI) steps in.

Explainable AI refers to a set of techniques and methods that make the decision-making process of AI systems transparent, interpretable, and trustworthy. It allows humans to understand the reasoning behind AI predictions, making those systems more accountable, fair, and compliant with ethical and regulatory standards.

In this blog, we’ll explore how XAI works, why it’s essential, the key methods used in modern systems, and how you can implement explainability using real Python code.

Understanding the Basics of Explainable AI

At its core, Explainable AI aims to bridge the gap between accuracy and interpretability. Traditional machine learning models like Linear Regression or Decision Trees are inherently explainable because their decisions follow explicit coefficients or if/then rules that a person can inspect. Modern AI systems, especially deep learning networks, are highly non-linear and opaque.

Two Approaches to Explainability

- Intrinsic Explainability – The model itself is interpretable. Examples include decision trees, linear models, and rule-based systems.

- Post-hoc Explainability – Explanations are derived after the model is trained. Techniques like LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) help reveal how input features influence predictions.
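To see intrinsic explainability in practice, here is a minimal sketch that trains a shallow decision tree and prints its learned rules as plain text with scikit-learn's `export_text`. The Iris dataset and the depth limit of 2 are arbitrary choices for illustration, not part of any particular XAI workflow.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()

# A shallow tree keeps the rule set small enough to read at a glance
tree = DecisionTreeClassifier(max_depth=2, random_state=0).fit(iris.data, iris.target)

# export_text renders every split and leaf as human-readable if/else rules
rules = export_text(tree, feature_names=list(iris.feature_names))
print(rules)
```

The printed rules are the model: nothing has to be approximated after training, which is exactly what separates intrinsic from post-hoc explainability.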

Example: A Simple Black-Box Model

Let’s start with a black-box model to see why we need explainability.

```python
from sklearn.datasets import fetch_california_housing
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

# Load dataset (load_boston was removed in scikit-learn 1.2; this one downloads on first use)
data = fetch_california_housing()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.2, random_state=42
)

# Train a black-box model
model = RandomForestRegressor(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Make a prediction for the first test district (target is in units of $100,000)
prediction = model.predict(X_test[:1])
print(f"Predicted median house value: {prediction[0]:.2f} (x $100,000)")
```

The model predicts a house price but gives no clue why. That’s where XAI techniques come in.

Difference Between Explainability, Interpretability, and Transparency

Though often used interchangeably, these concepts have distinct meanings in AI ethics and engineering:

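- Explainability refers to the ability to describe the internal mechanics of a system in human terms. It builds on interpretability: once a model’s behavior is understood, explainability is about communicating that understanding to people.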

- Interpretability is about understanding the cause-and-effect relationships inside a model—how inputs influence outputs.

- Transparency describes how open or visible the system’s internal logic and parameters are.

A transparent model (like Linear Regression) directly exposes its coefficients. An interpretable model (like a Decision Tree) can show its feature splits. A deep neural network, however, might be accurate yet opaque, requiring post-hoc explainability to make sense of it.

For example:

- Linear Regression – High transparency, high interpretability

- Neural Network – Low transparency, low interpretability, needs XAI
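The transparency of linear regression is easy to demonstrate: its reasoning can be read straight off the fitted parameters. The sketch below fits scikit-learn's `LinearRegression` to synthetic data generated from a known rule (an assumption chosen so the recovered coefficients can be checked) and prints one coefficient per feature.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data from a known rule: y = 3*x0 - 2*x1 + 0*x2 + small noise
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.1, size=200)

lin = LinearRegression().fit(X, y)

# Each coefficient is the change in prediction per unit change of that feature,
# holding the other features fixed, so the model explains itself
for name, coef in zip(["x0", "x1", "x2"], lin.coef_):
    print(f"{name}: {coef:+.2f}")
```

No comparable one-line readout exists for a neural network, whose behavior is spread across many layers of weights; that is the gap post-hoc XAI methods try to fill.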

Why Do We Need Explainable AI?

As AI systems increasingly affect human lives, trust becomes critical. Organizations, regulators, and users must be able to understand and justify automated decisions.

Here’s why XAI is essential:

- Regulatory Compliance – Regulations such as the EU’s GDPR and AI Act set transparency requirements for automated decisions, including what is often called a “right to explanation.”

- Bias Detection – Explainability helps identify when models make unfair or discriminatory predictions.

- Model Debugging – Engineers can better diagnose why models fail or overfit.

- User Trust – Users are more likely to accept and adopt AI systems whose reasoning they can understand.

Imagine a loan approval system where the AI says, “Application rejected due to low credit score and high debt-to-income ratio.” This is an explainable decision. Without it, users may distrust or challenge the outcome.

Techniques and Methods Used in XAI

1. LIME (Local Interpretable Model-Agnostic Explanations)

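LIME explains a single prediction by approximating the complex model locally: it perturbs the input around that instance, queries the model on the perturbed samples, and fits a simple, interpretable model (such as a weighted linear regression) to the results.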

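```python
import lime.lime_tabular

# Build an explainer from the training data so LIME knows each feature's distribution
explainer = lime.lime_tabular.LimeTabularExplainer(
    training_data=X_train,
    feature_names=data.feature_names,
    mode='regression'
)

# Explain one prediction of the random forest trained above
exp = explainer.explain_instance(X_test[0], model.predict)
exp.show_in_notebook(show_table=True)  # outside Jupyter, use print(exp.as_list())
```

This produces a visualization showing which features most influenced that specific prediction.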
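To make the mechanics concrete, here is a simplified, from-scratch sketch of the LIME idea using only NumPy and scikit-learn. It is not the `lime` library’s implementation (for tabular data, that library discretizes continuous features by default and samples perturbations from training-data statistics); the Gaussian perturbations, the exponential proximity kernel, and the helper name `lime_style_weights` are illustrative assumptions. A known linear "black box" is used so the result can be checked.

```python
import numpy as np
from sklearn.linear_model import Ridge


def lime_style_weights(predict_fn, x, X_background, num_samples=5000, seed=0):
    """Simplified LIME-style local surrogate (illustrative, not the lime library).

    Returns the coefficients of a proximity-weighted linear model fitted to the
    black box's predictions on random perturbations around `x`.
    """
    rng = np.random.default_rng(seed)
    scale = X_background.std(axis=0)
    scale[scale == 0] = 1.0  # guard against constant features

    # 1. Perturb the instance with Gaussian noise sized to each feature's spread
    Z = x + rng.normal(size=(num_samples, x.size)) * scale

    # 2. Weight samples by closeness to x (exponential kernel on standardized distance)
    dist = np.linalg.norm((Z - x) / scale, axis=1)
    kernel_width = 0.75 * np.sqrt(x.size)  # width heuristic borrowed from LIME's tabular default
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)

    # 3. Query the black box and fit an interpretable, weighted linear surrogate
    surrogate = Ridge(alpha=1.0).fit(Z, predict_fn(Z), sample_weight=weights)
    return surrogate.coef_


def black_box(Z):
    # Stand-in model whose true local effects are known: 3 for x0, -2 for x1, 0 otherwise
    return 3 * Z[:, 0] - 2 * Z[:, 1]


rng = np.random.default_rng(1)
X_bg = rng.normal(size=(500, 4))
coefs = lime_style_weights(black_box, X_bg[0], X_bg)
print(np.round(coefs, 2))
```

With the housing model from earlier, the same helper could be called as `lime_style_weights(model.predict, X_test[0], X_train)`; for a nonlinear model like a random forest, the coefficients describe only the neighborhood of that one instance.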

2. SHAP (SHapley Additive exPlanations)

SHAP uses Shapley values from cooperative game theory to attribute each feature’s contribution to the model’s output for a given prediction.
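For reference, the Shapley value assigned to feature $i$ averages that feature’s marginal contribution over every subset $S$ of the other features. Here $F$ is the full feature set, $f$ the model, $x$ the instance being explained, and $v(S)$ the model’s expected output when only the features in $S$ are fixed to their values in $x$:

$$
\phi_i = \sum_{S \subseteq F \setminus \{i\}} \frac{|S|!\,\left(|F| - |S| - 1\right)!}{|F|!}\left[v(S \cup \{i\}) - v(S)\right]
$$

Because $v(F) = f(x)$ and $v(\varnothing) = \mathbb{E}[f(X)]$, the attributions add up exactly: $\sum_{i \in F} \phi_i = f(x) - \mathbb{E}[f(X)]$, the gap between this prediction and the model’s average output.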

```python
import shap

explainer = shap.TreeExplainer(model)
shap_values = explainer.shap_values(X_test)

# Visualize feature impact
shap.summary_plot(shap_values, X_test, feature_names=data.feature_names)
```

This gives both a global and a local view of how each feature contributes, positively or negatively, to predictions.
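To see what these attributions mean, the sketch below computes exact Shapley values by brute force for a tiny hand-written function. Two simplifying assumptions: features "absent" from a coalition are replaced by a single baseline point (SHAP’s explainers handle missing features differently, for example by averaging over background data), and `exact_shapley` is a hypothetical helper, not part of the `shap` API. The loop over every coalition also shows why exact computation becomes infeasible as the number of features grows.

```python
from itertools import combinations
from math import factorial

import numpy as np


def exact_shapley(f, x, baseline):
    """Exact Shapley values for f at x, treating 'absent' features as baseline values.

    Enumerates all 2**(n-1) coalitions per feature, so it is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        z = baseline.copy()
        idx = list(coalition)
        z[idx] = x[idx]  # features in the coalition take their real values
        return f(z)

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for S in combinations(others, size):
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi


def f(z):
    # Toy model: an additive term for z0 plus an interaction between z1 and z2
    return 2 * z[0] + z[1] * z[2]


x = np.array([1.0, 2.0, 3.0])
baseline = np.zeros(3)
phi = exact_shapley(f, x, baseline)
print(np.round(phi, 6))                            # [2. 3. 3.]
print(np.isclose(phi.sum(), f(x) - f(baseline)))   # True: attributions sum to f(x) - f(baseline)
```

The additive term is credited entirely to z0, while the z1·z2 interaction is split evenly between z1 and z2, as the symmetry property of Shapley values requires.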

3. Feature Importance and Partial Dependence Plots (PDP)

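```python
import matplotlib.pyplot as plt
from sklearn.inspection import PartialDependenceDisplay

# Impurity-based importances computed by the random forest during training
for name, score in sorted(zip(data.feature_names, model.feature_importances_), key=lambda t: -t[1]):
    print(f"{name}: {score:.3f}")

# plot_partial_dependence was removed in scikit-learn 1.2; PartialDependenceDisplay replaces it
PartialDependenceDisplay.from_estimator(model, X_train, [0, 1], feature_names=data.feature_names)
plt.show()
```

Feature importances rank which features the model relies on overall, while PDPs show how the model’s average prediction changes as one or two features vary, averaging over the observed values of all other features.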
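Under the hood, a partial dependence curve can be computed by brute force: set the chosen feature to the same grid value in every row, predict, and average. The sketch below does exactly that for a known toy function (an assumption made so the result can be checked by hand); `partial_dependence_1d` is a hypothetical helper. scikit-learn’s own `partial_dependence` uses this brute-force approach for general estimators and offers a faster recursion method for some tree-based models.

```python
import numpy as np


def partial_dependence_1d(predict_fn, X, feature, grid):
    """Brute-force partial dependence of predict_fn on one feature."""
    averages = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value                   # force the feature to this value in every row
        averages.append(predict_fn(X_mod).mean())   # average over the other features' observed values
    return np.array(averages)


def toy_model(Z):
    # Known function: quadratic in feature 0, linear in feature 1
    return Z[:, 0] ** 2 + Z[:, 1]


rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 2))
grid = np.linspace(-2, 2, 5)
pd_curve = partial_dependence_1d(toy_model, X, feature=0, grid=grid)

# The curve should equal grid**2 shifted by the mean of feature 1
print(np.round(pd_curve - X[:, 1].mean(), 3))  # [4. 1. 0. 1. 4.]
```

Because every row is forced to the same grid value, the averages can include unrealistic feature combinations when features are strongly correlated, a known caveat when reading PDPs.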

Applications of Explainable AI

- Healthcare – Explaining why an AI predicted a disease risk allows doctors to make informed decisions rather than relying blindly on algorithms.

- Finance – XAI helps banks justify credit approvals, detect fraud, and comply with audit requirements.

- Autonomous Vehicles – Explainability can trace the logic behind steering, braking, or hazard detection decisions.

- Human Resources – When screening candidates with AI, companies can check that decisions aren’t biased by gender or ethnicity.

- Cybersecurity – AI-powered intrusion detection systems can explain why a certain network behavior was flagged as suspicious.

Example: Explaining a Medical Diagnosis

```python
import shap
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier

# as_frame=True keeps column names such as "mean radius" for the plot labels
X, y = load_breast_cancer(return_X_y=True, as_frame=True)
clf = GradientBoostingClassifier().fit(X, y)

explainer = shap.Explainer(clf, X)
shap_values = explainer(X)

# Beeswarm summary plot: one dot per sample per feature
shap.plots.beeswarm(shap_values)
```

The SHAP summary plot highlights which medical features (like “mean radius” or “mean texture”) drive the cancer prediction, helping doctors validate model decisions.

Limitations of XAI

Despite its potential, XAI isn’t a silver bullet. Some limitations include:

- Approximation Error – LIME fits a local surrogate model, and model-agnostic SHAP explainers estimate Shapley values by sampling, so explanations summarize model behavior rather than reproduce it exactly.

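- Computation Overhead – Exact Shapley values require evaluating every subset of features, a number that grows exponentially with the feature count, and even approximate methods can be slow on large datasets.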

- Complexity of Explanations – Ironically, some “explanations” are still too technical for non-experts.

- Trade-off with Accuracy – More interpretable models (like Decision Trees) may sacrifice accuracy compared to deep learning.

For instance, explaining a 200-layer convolutional neural network might require simplifications that lose nuanced reasoning.

The Future of Explainable AI

The next phase of AI governance will emphasize responsibility, fairness, and interpretability as much as raw performance.

Emerging areas include:

- Causal XAI – Understanding not just what features influence outcomes but how they cause them.

- Counterfactual Explanations – Showing users what needs to change to alter an AI decision (“Your loan would be approved if income were $5,000 higher”).

- Human-AI Collaboration – Building hybrid systems where humans and AI explain decisions to each other.
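A counterfactual explanation like the loan example above can be sketched as a simple search: nudge one feature in small steps until the model’s decision changes. Everything here is hypothetical: the synthetic loan data, its approval rule, the applicant, and the helper `single_feature_counterfactual`. Dedicated counterfactual methods search over several features at once and add constraints so the suggested change is plausible and actionable.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def single_feature_counterfactual(model, x, feature, step, max_steps=1000):
    """Smallest increase of one feature (in multiples of `step`) that flips the decision.

    Returns the required change, or None if no flip happens within max_steps.
    """
    original = model.predict(x.reshape(1, -1))[0]
    for k in range(1, max_steps + 1):
        candidate = x.copy()
        candidate[feature] += k * step
        if model.predict(candidate.reshape(1, -1))[0] != original:
            return k * step
    return None


# Hypothetical loan data: columns are [annual income in $1,000s, debt-to-income ratio]
rng = np.random.default_rng(0)
income = rng.uniform(20, 150, size=500)
dti = rng.uniform(0.0, 0.8, size=500)
approved = (0.05 * income - 5 * dti > 2).astype(int)  # synthetic approval rule
X = np.column_stack([income, dti])

clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)).fit(X, approved)

applicant = np.array([40.0, 0.5])  # rejected under the synthetic rule
change = single_feature_counterfactual(clf, applicant, feature=0, step=1.0)
if change is not None:
    print(f"Approval predicted if income were ${change * 1000:,.0f} higher")
```

Searching along a single feature keeps the explanation easy to state, which is the appeal of counterfactuals, but it ignores other changes (such as lowering debt) that might flip the decision more cheaply.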

As frameworks like TensorFlow, PyTorch, and scikit-learn gain explainability tooling (for example, scikit-learn’s inspection module and the Captum library for PyTorch), XAI is becoming an essential part of the ML pipeline.

Conclusion

Explainable AI (XAI) is not just a buzzword—it’s the backbone of ethical, transparent, and trustworthy AI systems. By making complex models more interpretable, it enables organizations to meet compliance requirements, uncover biases, and build user confidence.

From local explanation methods like LIME to game-theoretic approaches like SHAP, developers today have powerful tools to peer inside the black box of machine learning.

As AI adoption accelerates across industries, XAI ensures that the models shaping our world remain accountable, understandable, and human-centric.

Ultimately, the future of AI isn’t just about smarter algorithms—it’s about algorithms we can trust, explain, and learn from.