Artificial Intelligence has become an inseparable part of modern technology, powering everything from recommendation engines to self-driving cars. But traditional AI models are often large, computationally expensive, and dependent on cloud servers for inference. This is where TinyML (Tiny Machine Learning) comes in. It is a paradigm shift that brings machine learning capabilities to low-power, resource-constrained devices, such as microcontrollers and IoT sensors.

TinyML enables intelligent data processing directly on devices (known as edge inference) without needing constant internet access or cloud computing. Imagine a smartwatch that detects irregular heartbeats, or a smart doorbell that identifies familiar faces, all without sending data to the cloud.

TinyML combines machine learning, embedded systems, and IoT to deliver real-time, low-latency, privacy-preserving intelligence on edge devices. It’s already being adopted in industries like healthcare, manufacturing, automotive, and environmental monitoring. This makes AI not just smaller, but smarter and more efficient.

How TinyML Works

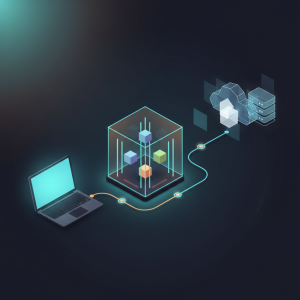

TinyML operates by training machine learning models on powerful machines (like laptops or cloud GPUs), then compressing and deploying them to edge devices with limited memory and processing power.

Here’s how the TinyML pipeline typically works:

- Model Training — Train a machine learning model using standard frameworks like TensorFlow or PyTorch.

- Model Optimization — Reduce model size via techniques like quantization, pruning, and knowledge distillation.

- Deployment — Convert and deploy the optimized model to embedded systems using tools like TensorFlow Lite for Microcontrollers or Edge Impulse.

- On-Device Inference — The device collects real-world sensor data (like motion or sound) and makes predictions locally.
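The optimization step can be illustrated with a minimal sketch of affine (scale and zero-point) quantization, written in plain Python so it runs without any ML framework. The function names `quantize` and `dequantize` and the sample weights are illustrative assumptions, not part of any TinyML toolchain; real converters such as TensorFlow Lite use more elaborate per-tensor or per-channel schemes.

```python
def quantize(weights, num_bits=8):
    """Map floats to signed integers with an affine (scale + zero-point) scheme."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # The representable range must include 0.0 so that zero maps exactly.
    w_min, w_max = min(min(weights), 0.0), max(max(weights), 0.0)
    scale = (w_max - w_min) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - w_min / scale)
    q = [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the quantized integers."""
    return [(v - zero_point) * scale for v in q]


# Hypothetical float32 weights from a trained layer.
weights = [-0.82, -0.10, 0.0, 0.37, 1.25]
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("max round-trip error:", max_error)
```

Each weight now needs one byte instead of four, at the cost of a small rounding error bounded by the quantization step size.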

Example: TinyML in Action (TensorFlow Lite Micro)

Here’s a simple example of how you might run an inference on a microcontroller using TensorFlow Lite for Microcontrollers (TFLM):

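```cpp
#include "tensorflow/lite/micro/all_ops_resolver.h"
#include "tensorflow/lite/micro/micro_interpreter.h"
#include "tensorflow/lite/schema/schema_generated.h"
#include "tensorflow/lite/version.h"

// Example model data, compiled into the firmware as a C array
extern const unsigned char model_tflite[];

// Working memory for the model's tensors; the required size depends on the model
constexpr int kTensorArenaSize = 8 * 1024;
alignas(16) uint8_t tensor_arena[kTensorArenaSize];

void setup() {
  Serial.begin(9600);
  const tflite::Model* model = tflite::GetModel(model_tflite);
  tflite::AllOpsResolver resolver;  // Registers every supported operator

  // The trailing constructor arguments differ between TFLM releases; check your version
  tflite::MicroInterpreter interpreter(model, resolver, tensor_arena, kTensorArenaSize, nullptr);
  if (interpreter.AllocateTensors() != kTfLiteOk) {
    Serial.println("AllocateTensors() failed");
    return;
  }

  TfLiteTensor* input = interpreter.input(0);
  input->data.f[0] = 0.75f;  // Example input data

  if (interpreter.Invoke() != kTfLiteOk) {
    Serial.println("Invoke() failed");
    return;
  }

  float output = interpreter.output(0)->data.f[0];
  Serial.println(output);  // Print prediction result
}

void loop() {}
```

This example runs a lightweight ML model on a microcontroller, performing inference directly without any cloud dependency.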

Benefits of TinyML

TinyML isn’t just about running small models — it’s about making AI practical in environments where cloud processing isn’t viable. Some of its key benefits include:

- Low Latency

  TinyML performs inference locally, so there’s no need to send data to remote servers. This means near-instant responses, which are critical for applications like gesture recognition or anomaly detection in machinery.

- Reduced Power Consumption

  Since TinyML models run on microcontrollers (often under 1 milliwatt of power), devices can run for months or even years on small batteries.

- Privacy & Security

  Sensitive data never leaves the device. For instance, a healthcare wearable can analyze biometric data without uploading it to external servers.

- Offline Operation

  TinyML enables AI applications in remote or offline environments, from agriculture sensors in rural areas to wildlife monitoring systems in forests.

- Scalability

  TinyML solutions can be deployed across millions of low-cost IoT devices, each performing localized analytics efficiently.
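The power-consumption claim can be checked with a back-of-the-envelope estimate. The sketch below is only illustrative: the battery capacity (a coin cell of roughly 225 mAh at 3 V), the sleep power, and the duty cycle are assumed figures, and the hypothetical helper `battery_life_days` ignores self-discharge and voltage-converter losses.

```python
def battery_life_days(capacity_mah, voltage_v, active_mw, sleep_mw, duty_cycle):
    """Estimate runtime in days from the average power draw."""
    energy_mwh = capacity_mah * voltage_v
    average_mw = active_mw * duty_cycle + sleep_mw * (1.0 - duty_cycle)
    return energy_mwh / average_mw / 24.0


# Assumed figures for illustration only: ~225 mAh coin cell at 3 V,
# 1 mW while running inference, 0.01 mW while asleep.
always_on = battery_life_days(225, 3.0, active_mw=1.0, sleep_mw=0.01, duty_cycle=1.0)
duty_cycled = battery_life_days(225, 3.0, active_mw=1.0, sleep_mw=0.01, duty_cycle=0.05)

print(f"always on:     {always_on:.0f} days")
print(f"5% duty cycle: {duty_cycled:.0f} days")
```

Under these assumed numbers, continuous 1 mW operation lasts about four weeks, while waking for inference 5% of the time stretches the same cell past a year. Multi-month lifetimes therefore usually depend on duty cycling as well as on a small model.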

Common Use Cases and Applications

TinyML is reshaping how AI is integrated into embedded and IoT systems. Let’s explore some real-world applications:

- Predictive Maintenance

  Sensors attached to machinery can detect anomalies (like vibration or sound changes) in real time, helping prevent costly breakdowns.

- Smart Agriculture

  Edge-based AI can monitor soil moisture, crop health, or livestock activity using microcontrollers, reducing dependence on internet connectivity.

- Healthcare Wearables

  TinyML enables ECG, heart rate, and sleep pattern monitoring without transferring data to the cloud, ensuring both privacy and efficiency.

- Environmental Monitoring

  Low-power sensors can identify air quality levels, detect forest fires, or monitor wildlife movement autonomously.

- Consumer Electronics

  From voice-controlled remotes to smart appliances, TinyML adds intelligence to everyday devices with minimal hardware upgrades.
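To give a feel for the predictive-maintenance case, here is a minimal sketch of a streaming anomaly detector that uses constant memory, as a microcontroller would need. It is a simple statistical stand-in (a running z-score computed with Welford's method), not a trained TinyML model, and the class name `StreamingAnomalyDetector`, its thresholds, and the vibration readings are all hypothetical.

```python
import math


class StreamingAnomalyDetector:
    """Flags readings far from the running mean, using constant memory."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold  # z-score above which a reading is anomalous
        self.warmup = warmup        # readings to observe before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations

    def update(self, value):
        """Return True if `value` is anomalous; otherwise fold it into the statistics."""
        anomalous = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            anomalous = std > 0 and abs(value - self.mean) / std > self.threshold
        if not anomalous:  # keep outliers from skewing the baseline
            self.count += 1
            delta = value - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (value - self.mean)
        return anomalous


# Hypothetical vibration amplitudes; the spike at the end simulates a fault.
readings = [1.00, 1.02, 0.98, 1.01, 0.99, 1.03, 0.97, 1.00, 1.02, 0.98, 1.01, 2.50]
detector = StreamingAnomalyDetector()
flags = [detector.update(r) for r in readings]
print("anomalous indices:", [i for i, f in enumerate(flags) if f])
```

The detector stores only three numbers regardless of how many readings it has seen, which is the kind of footprint that suits a device with a few kilobytes of RAM. A deployed system would more likely replace the z-score with a small trained model.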

Example: Edge Inference with Python (Simulation)

While real TinyML runs on microcontrollers, we can simulate its behavior in Python using TensorFlow Lite:

```python
import numpy as np
import tensorflow as tf

# Build a small Keras model (untrained here; a real workflow would train it first)
model = tf.keras.Sequential([
    tf.keras.layers.Dense(8, activation='relu', input_shape=(4,)),
    tf.keras.layers.Dense(3, activation='softmax')
])

# Convert the model to the TensorFlow Lite format
converter = tf.lite.TFLiteConverter.from_keras_model(model)
tflite_model = converter.convert()

# Run inference using the TFLite interpreter
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()

input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

test_input = np.array([[0.1, 0.2, 0.3, 0.4]], dtype=np.float32)
interpreter.set_tensor(input_details[0]['index'], test_input)
interpreter.invoke()

prediction = interpreter.get_tensor(output_details[0]['index'])
print("Predicted:", prediction)
```

This shows how a regular ML model can be converted into the TFLite format, a crucial step in deploying models to TinyML devices.

Challenges and Limitations

While TinyML holds immense promise, it’s not without challenges:

- Limited Processing Power – Microcontrollers can’t handle complex models like large CNNs or Transformers.

- Memory Constraints – Devices typically have less than 256 KB of RAM, making model optimization essential.

- Model Accuracy Trade-offs – Reducing model size often leads to slight drops in prediction accuracy.

- Hardware Compatibility – Each embedded system (Arduino, STM32, ESP32) requires tailored deployment.

- Debugging Difficulty – Without proper tooling, debugging models on embedded devices can be tedious.

However, advancements in hardware accelerators, edge AI frameworks (like Edge Impulse and TensorFlow Lite Micro), and benchmarks (like MLPerf Tiny, also known as TinyMLPerf) are rapidly addressing these issues.
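To make the memory constraint concrete, the following sketch estimates the parameter storage of a small fully connected network at two precisions and compares it with the 256 KB figure mentioned above. The layer sizes and the helper names `dense_param_count` and `fits_in_budget` are hypothetical. The estimate counts parameters only, ignoring activations and runtime overhead, and it glosses over the fact that many microcontrollers keep weights in flash rather than RAM.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def fits_in_budget(layer_sizes, bytes_per_param, budget_bytes):
    """Return the parameter size in bytes and whether it fits the budget."""
    size = dense_param_count(layer_sizes) * bytes_per_param
    return size, size <= budget_bytes


BUDGET = 256 * 1024  # the 256 KB figure cited above

# Hypothetical network: 1,024 inputs, two hidden layers, 10 outputs.
layers = [1024, 128, 64, 10]
for label, width in (("float32", 4), ("int8", 1)):
    size, ok = fits_in_budget(layers, width, BUDGET)
    print(f"{label}: {size / 1024:.1f} KB, fits: {ok}")
```

With these assumed layer sizes, the float32 parameters alone exceed the budget while the int8 version fits, which is why quantization is often the first optimization applied.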

Conclusion

TinyML represents the next frontier of artificial intelligence — one where AI meets IoT to make devices intelligent, autonomous, and power-efficient. It bridges the gap between data collection and actionable insights by bringing machine learning directly to the edge.

By enabling real-time, offline intelligence, TinyML has opened doors to smarter wearables, safer factories, greener farms, and more connected cities. As frameworks evolve and hardware improves, developers can deploy increasingly complex AI solutions on even smaller devices.

In the future, we can expect TinyML to seamlessly integrate into daily life — from detecting anomalies in car engines to monitoring environmental changes — all without relying on the cloud.

The next wave of innovation won’t just be smart — it will be tiny, efficient, and everywhere.